Hi All,

I have two entities, Appointment Date and Date of Birth, in different dialog tasks. I would like to train the bot so that it recognizes the intent correctly.

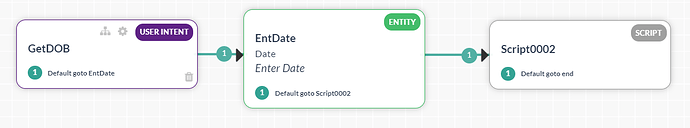

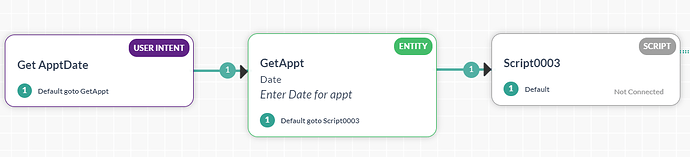

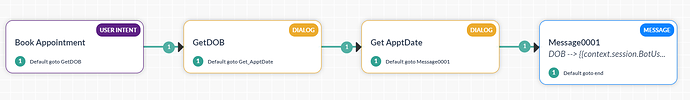

To give a brief idea of my requirement, here are screenshots of the intent and the chat flow.

The intent BookAppointment and its sub-intents are shown below:

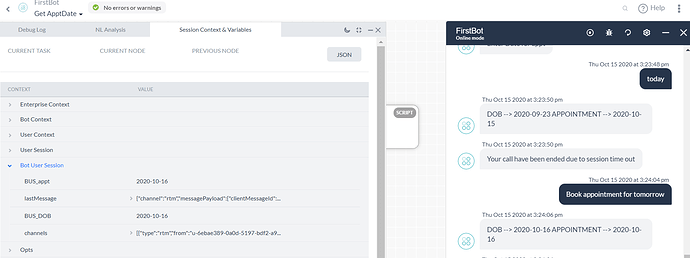

This is a screenshot of the conversation and the Bot UserSession variables:

Now, for Book Appointment, I am adding the training utterance "Book appointment for tomorrow", and I want "tomorrow" to be understood as the appointment date, not the date of birth. When I test this scenario, both date entities take the same value. Is there any way to stop the DOB entity from taking a value from these utterances? We have a separate input for DOB, and the user should be prompted for it and enter it every time.

Thanks in advance

The scenario here is not about training but about how dialogs and entities are processed.

Internally, Kore keeps track of which words in a user’s utterances have been used up by intent identification and by previous entities. The general principle is that each entity, when it is actually processed, first considers unused words. There is some subtlety to that, of course: entities can reuse intent words, and words can be reserved for future use.

This word-usage tracking happens within the context of a dialog. When a new dialog is called (like the orange boxes above), a new dialog context is created and seeded with the current state. Importantly, though, nothing about word usage or new utterances is passed back to the calling dialog.

In this example, the DOB entity can pick up “tomorrow” as a date, but it doesn’t tell “Book Appointment” which words it used to derive that date, so “Book Appointment” can’t pass anything different on to “Get ApptDate”. When the GetAppt entity looks for a date, it sees the same wide-open set of words from the initial utterance, and therefore it reaches the same conclusion: the date is “tomorrow”.
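To make the word-usage tracking concrete, here is a toy Python model of it. It is a simplified sketch under stated assumptions, not Kore’s implementation or API: `DialogContext`, `call_subdialog`, `extract_date` and the tiny `DATE_WORDS` vocabulary are all hypothetical names chosen for illustration. Each called sub-dialog starts from a copy of the caller’s consumed-word set, and nothing it consumes flows back, so both date lookups find the same unused “tomorrow”.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

# Hypothetical toy vocabulary; a real date entity recognizes far more.
DATE_WORDS = {"today", "tomorrow", "yesterday"}


@dataclass
class DialogContext:
    """Toy dialog context: the utterance words plus indices already consumed."""
    words: list[str]
    used: set[int] = field(default_factory=set)

    def call_subdialog(self) -> DialogContext:
        # Hypothetical: the sub-dialog is seeded with a COPY of the caller's
        # state, so nothing it consumes is written back to the caller.
        return DialogContext(self.words, set(self.used))


def extract_date(ctx: DialogContext) -> Optional[str]:
    """Take the first unused date-like word in this context and mark it used."""
    for i, word in enumerate(ctx.words):
        if i not in ctx.used and word in DATE_WORDS:
            ctx.used.add(i)
            return word
    return None


book_appointment = DialogContext("book appointment for tomorrow".split())
dob = extract_date(book_appointment.call_subdialog())        # DOB sub-dialog
appt_date = extract_date(book_appointment.call_subdialog())  # "Get ApptDate" sub-dialog
print(dob, appt_date)  # tomorrow tomorrow
```

The caller’s `used` set stays empty after both calls, which is the toy equivalent of “Book Appointment” never learning which words the DOB dialog consumed.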

This means that this style of building dialogs, while very modular and focused on micro-intents, is not the right way to build this kind of dialog flow. It is better to have one dialog with the two entity nodes in sequence.

(Incidentally, your sub-dialogs have script nodes at the end. Are you aware that a dialog node has a post-assignments block that defines where to accept values back from the sub-dialog, without needing a script to set return values explicitly?)

Because word consumption matters, it is important to consider the order in which entities are painted in a flow. An appointment date is very likely to be mentioned in the original utterance, so it makes sense to try to extract that first, not the DOB. Note that an entity can always be painted on a dialog twice, with the first instance marked as hidden, if you want a particular prompt order in the conversation.

You also mentioned that the DOB should be entered every time. In that case, all you need to do is change the instance properties for that node to “Do not evaluate previous utterances and explicitly prompt the user”.
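A toy Python model can illustrate the single-dialog approach, why the painting order matters, and roughly what the “Do not evaluate previous utterances” setting amounts to. This is a hypothetical sketch, not Kore’s API: `fill_entities`, `prompt_only` and `DATE_WORDS` are illustrative names, all entities share one consumed-word set because they live in one dialog, and a value of `None` stands for “prompt the user”.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical toy vocabulary; a real date entity recognizes far more.
DATE_WORDS = {"today", "tomorrow", "yesterday"}


def fill_entities(
    words: list[str],
    order: list[str],
    prompt_only: frozenset[str] = frozenset(),
) -> dict[str, Optional[str]]:
    """Fill date entities in the given order within ONE shared dialog context.

    Entities in prompt_only skip the utterance entirely (a toy stand-in for
    "Do not evaluate previous utterances and explicitly prompt the user").
    A value of None means the bot would have to prompt the user.
    """
    used: set[int] = set()  # shared by every entity node in this dialog
    values: dict[str, Optional[str]] = {}
    for entity in order:
        values[entity] = None
        if entity in prompt_only:
            continue
        for i, word in enumerate(words):
            if i not in used and word in DATE_WORDS:
                used.add(i)  # later entities can no longer take this word
                values[entity] = word
                break
    return values


utterance = "book appointment for tomorrow".split()
print(fill_entities(utterance, ["ApptDate", "DOB"]))
# {'ApptDate': 'tomorrow', 'DOB': None}
print(fill_entities(utterance, ["DOB", "ApptDate"]))
# {'DOB': 'tomorrow', 'ApptDate': None}
print(fill_entities(utterance, ["DOB", "ApptDate"], frozenset({"DOB"})))
# {'DOB': None, 'ApptDate': 'tomorrow'}
```

In the toy model, painting the appointment date first, or marking DOB as prompt-only, both leave “tomorrow” for the appointment date; the second option also guarantees the DOB is always asked for.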