Hi all, I need some expert advice as I have received mixed messages on the best approach in the past.

We have over 800 intents: approximately 300 Knowledge Graph (KG) intents and 500 dialog tasks. We’ve been told our bot needs to be split into multiple bots to improve performance. We’ve also been told that, instead of splitting the bot, we could restructure our tasks into parent tasks and sub-tasks.

We can’t use the ‘splitting the bot’ option because we already have a universal bot in our account (the SmartAssist instance bot), which is linked to our automation bot (the bot that contains all of the tasks). A universal bot cannot be connected to another universal bot, and that is what we would need if we split our one automation bot into multiple child bots.

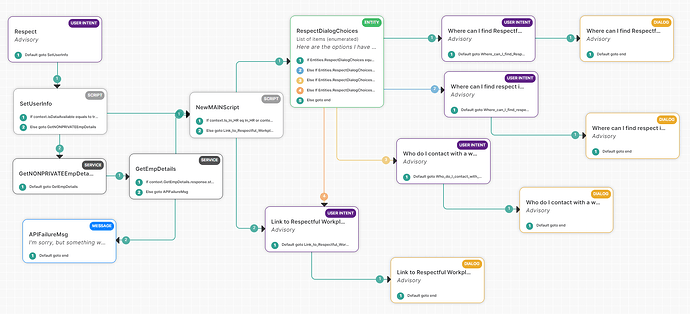

That leaves the other suggestion: collapsing our 500 dialog tasks into parent/child tasks. Here is how I envision it. For each group of similar dialog tasks (for example, the different intents related to ‘Respect’), we would create one parent intent and link it to multiple sub-intents that are not evaluated by the NLP engine.

I have tried designing this and am running into a couple of issues. I want to know whether there is a *best practice* way to design these tasks for my use case.

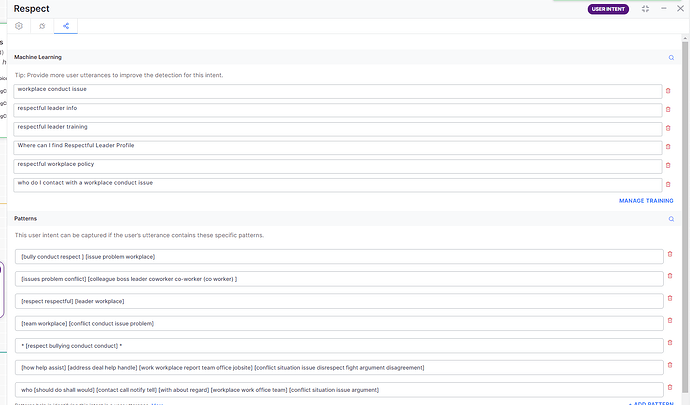

My parent intent is named ‘Respect’. I am adding all the NLP training utterances to this intent so that every user utterance related to respect is funneled through this task, which then branches into sub-tasks using an entity node.

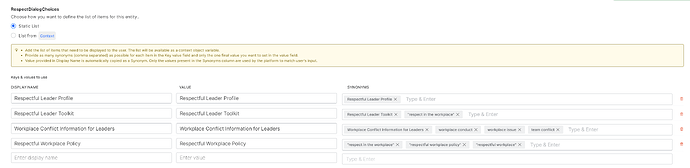

The entity node (type ‘list of enumerated items’) defines different utterances, phrases, and synonyms for each value so that the user is directed to the task they want. The values that point to each task are ‘Respectful Leader Toolkit’, ‘Respectful Leader Profile’, ‘Workplace Conflict Information for Leaders’, and ‘Respectful Workplace Policy’. Depending on what the user says, the entity node directs them to the correct sub-dialog task. This means a sub-task is never triggered without first flowing through the parent, which is what should help performance.
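To make the routing idea concrete, here is a rough plain-Python model of what I expect the entity node to do. It is not Kore.ai code and not how the platform actually matches entity values; the synonym lists are made-up placeholders, and `RESPECT_CHOICES` and `route_sub_task` are hypothetical names for illustration only.

```python
from typing import Optional

# Hypothetical model of an enumerated-list entity: each value (sub-task name)
# has a list of synonyms. Synonyms here are placeholders, not real bot data.
RESPECT_CHOICES = {
    "Respectful Leader Toolkit": ["leader toolkit", "toolkit"],
    "Respectful Leader Profile": ["leader profile", "profile"],
    "Workplace Conflict Information for Leaders": ["workplace conflict", "conflict"],
    "Respectful Workplace Policy": ["workplace policy", "policy"],
}


def route_sub_task(utterance: str) -> Optional[str]:
    """Return the first sub-task whose name or synonym appears in the utterance.

    Matching is a simple case-insensitive substring check, checked in the
    order the values are listed; None means no value matched.
    """
    text = utterance.lower()
    for task, synonyms in RESPECT_CHOICES.items():
        for phrase in [task.lower(), *synonyms]:
            if phrase in text:
                return task
    return None


if __name__ == "__main__":
    print(route_sub_task("Where can I find the leader toolkit?"))
```

In this model, `None` stands for an utterance that matches no value, where I assume the bot would re-prompt the user rather than jump to a sub-task.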

When I tested this, I got the correct response for each choice. The issue is that even though I am pointing to the correct sub-intent, the NLP Intents Found report does not show that the sub-task was triggered. It only shows ‘Respect’ (the parent), not the sub-task that was actually triggered.

Then I tried adding an intent node before each dialog task node. That made the sub-task appear in the NLP report for some utterances, but not all. The attached screenshots show this version; previously I had only the dialog task nodes, without the intent nodes.

Now I am back to trying to figure out the right approach. If I use parent/child tasks as shown, the NLP reports don’t accurately show which sub-task was triggered. If I do nothing, we keep a flat dialog task structure and lose the opportunity to group related tasks for better performance and intent recognition.

Do any design engineers or experts here know the best way to achieve this? I need to improve performance overall. We can’t add more child bots because we already have a universal bot for SmartAssist. We want to keep building out our dialog tasks, and we can’t use the KG for these because of the integrations they require.

See screenshots for design.

Last question: can I just add the dialog task node in my entity connectors, or is there a reason to add an intent node first and then the dialog task of the same name? I don’t understand the true purpose of the intent node other than to build a new task, yet you can add intent nodes at any point in a flow. Why wouldn’t you just add the dialog task node? I haven’t found a clear answer, even after reading the documentation. Thank you!

Stacy